PROJECT SUMMARY

INDUSTRY

SaaS

COLLABORATION

Team augmentation

CHALLENGE

Create a competitive advantage by enabling clients to deploy the platform on their own servers.

RESULTS

- 6X growth in clients

- 300,000 new users

- 15 microservices deployed

Imagine reaching the ceiling of what your current tech infrastructure can handle while your business is ready to soar. That’s exactly the challenge our client faced. Their collaborative workspace platform was bursting at the seams on Heroku, and they needed room to grow – fast.

This case study details how Setronica’s engineering team transformed the client’s infrastructure through containerization and Kubernetes orchestration, resulting in remarkable business growth.

Client background and challenge

For three years, Setronica has been supporting a collaborative workspace service designed for teams:

- Keeps business processes in one centralized location.

- Serves 2.2 million monthly active users.

- Maintains an ISO 27001:2013 certified information security management system.

“We need to move everything off Heroku and onto our own servers – and our users want to run our services on their infrastructure too.” That was essentially the brief when the client first approached us. Not just a simple lift-and-shift migration, but a complete rethinking of how their application was deployed and delivered.

Given the complexity involved, we assembled a team of three DevOps engineers and a project manager to help the client.

Phase 1. Containerization and MVP development

The first one-month sprint was dedicated to creating an MVP. The first order of business was getting everything containerized with Docker. We wrote launch instructions for all the key components, namely PostgreSQL (with all its extensions), MongoDB, Redis, and RabbitMQ, each running from an official Docker image.

We set up a private repository on Docker Hub to keep track of all the container image versions, which was critical for maintaining order in what could easily become chaos. Next came the docker-compose files that defined how the containers would work together.

Nginx was configured as the front door, handling incoming traffic and routing requests to the right containers.
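As a rough illustration of how such a stack fits together, the sketch below builds a Compose-style service map in Python and derives a safe start order from the declared dependencies. The image tags, the `app` service, the registry path, and the volume name are assumptions made for the example, not the client's actual configuration.

```python
import json
from graphlib import TopologicalSorter


def build_compose_spec(registry: str, app_tag: str) -> dict:
    """Build a Compose-style spec as a plain dict (all names are illustrative)."""
    backing = ["postgres", "mongo", "redis", "rabbitmq"]
    return {
        "services": {
            # Backing services run from official images; the tags are assumptions.
            "postgres": {
                "image": "postgres:16",
                "volumes": ["pgdata:/var/lib/postgresql/data"],
            },
            "mongo": {"image": "mongo:7"},
            "redis": {"image": "redis:7"},
            "rabbitmq": {"image": "rabbitmq:3-management"},
            # Hypothetical application image pulled from a private repository.
            "app": {"image": f"{registry}/app:{app_tag}", "depends_on": backing},
            # Nginx is the only service that publishes a port to the host.
            "nginx": {
                "image": "nginx:stable",
                "ports": ["80:80"],
                "depends_on": ["app"],
            },
        },
        "volumes": {"pgdata": {}},
    }


def start_order(spec: dict) -> list:
    """Return service names ordered so that dependencies come first."""
    graph = {
        name: set(service.get("depends_on", []))
        for name, service in spec["services"].items()
    }
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    spec = build_compose_spec("registry.example.com/workspace", "1.0.0")
    print(json.dumps(spec, indent=2))
    print(start_order(spec))
```

Because JSON is a subset of YAML, the printed spec could in principle be saved and handed to Compose, although a hand-written YAML file is the more usual form.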

To make sure everything stayed reliable, we wired up CI/CD pipelines with automated testing. By month’s end, we had the client’s application running in containers on a VPS – a flexible, portable foundation we could build on.

Phase 2. Building the full-scale infrastructure

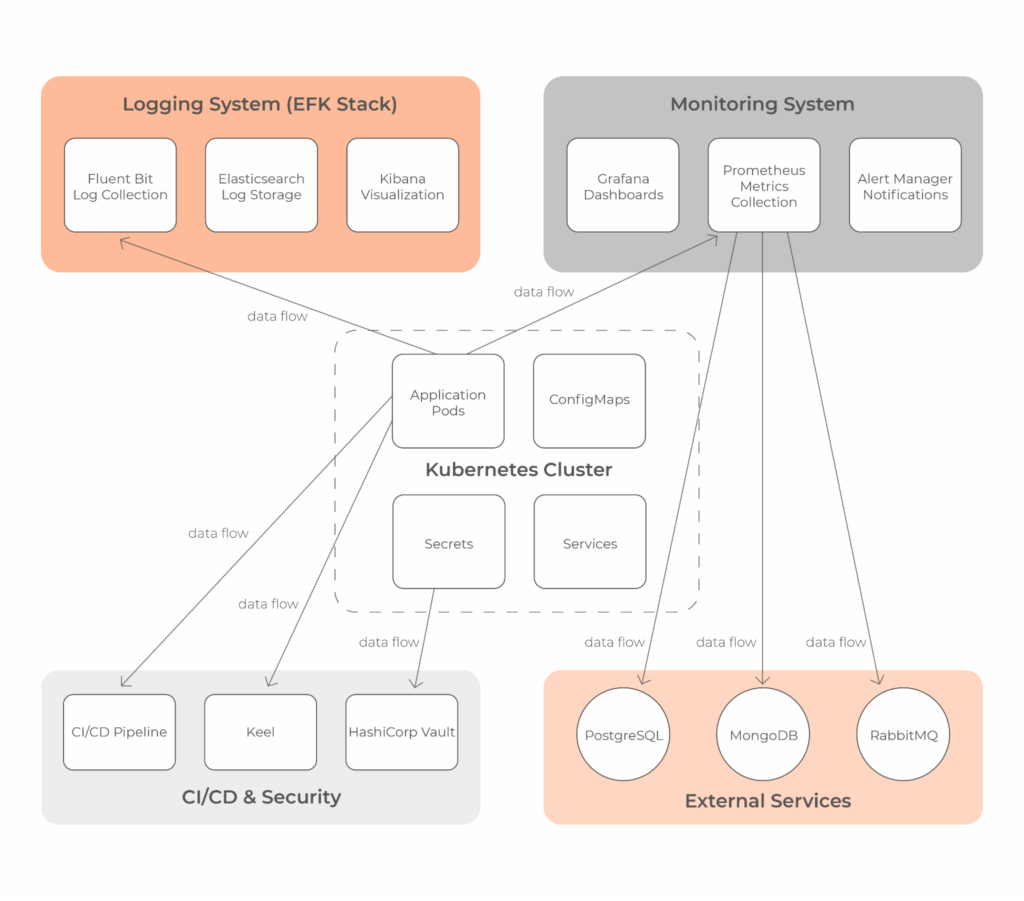

With our MVP running, we spent the next two months building out the infrastructure. We created a logging system for the Kubernetes clusters and all services. The team implemented the EFK stack (Elasticsearch, Fluentd, Kibana) for collecting logs, configured Kibana for access management, and set up alerts for critical services like PostgreSQL and RabbitMQ.

The logging system was also user-friendly, showing real-time application output and service launch information. All logs were kept for at least two weeks, and the retention period could be extended if needed.
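A two-week retention rule can be expressed in a few lines. The sketch below assumes logs are written to one index per day, named with a hypothetical `logs-YYYY.MM.DD` pattern, and selects the indices that are old enough to delete. The prefix, the naming pattern, and the function name are illustrative assumptions.

```python
from datetime import date, datetime, timedelta

RETENTION_DAYS = 14      # "at least two weeks"
INDEX_PREFIX = "logs-"   # hypothetical daily index prefix


def expired_indices(index_names, today, retention_days=RETENTION_DAYS):
    """Return the daily indices whose date is older than the retention window."""
    cutoff = today - timedelta(days=retention_days)
    expired = []
    for name in index_names:
        if not name.startswith(INDEX_PREFIX):
            continue  # not a log index, leave it alone
        try:
            day = datetime.strptime(name[len(INDEX_PREFIX):], "%Y.%m.%d").date()
        except ValueError:
            continue  # unexpected suffix, skip rather than guess
        # Strictly older than the cutoff, so every deleted entry is 14+ days old.
        if day < cutoff:
            expired.append(name)
    return sorted(expired)


if __name__ == "__main__":
    names = ["logs-2024.05.01", "logs-2024.05.06", "logs-2024.05.19", ".kibana"]
    print(expired_indices(names, today=date(2024, 5, 20)))
```

Extending retention then only means raising `retention_days`, provided the storage behind the cluster has the capacity.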

We also built a monitoring system that kept an eye on everything – container resources, server RAM, CPU usage, disk space, and critical OS metrics like open file descriptors. For the application itself, we tracked throughput and response times, with the ability to analyze performance patterns over time.
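To make the idea of threshold-based monitoring concrete, here is a minimal sketch that classifies one sample of host metrics as ok, warning, or critical. The metric names and threshold values are assumptions chosen for illustration and are not taken from the client's setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    warn: float
    critical: float


# Hypothetical limits, expressed as percentages of capacity.
THRESHOLDS = [
    Threshold("cpu_percent", warn=80, critical=95),
    Threshold("ram_percent", warn=85, critical=95),
    Threshold("disk_percent", warn=80, critical=90),
    Threshold("open_fds_percent", warn=70, critical=90),
]


def evaluate(sample: dict) -> dict:
    """Map each known metric to 'ok', 'warning', 'critical', or 'unknown'."""
    results = {}
    for threshold in THRESHOLDS:
        value = sample.get(threshold.metric)
        if value is None:
            results[threshold.metric] = "unknown"  # metric was not reported
        elif value >= threshold.critical:
            results[threshold.metric] = "critical"
        elif value >= threshold.warn:
            results[threshold.metric] = "warning"
        else:
            results[threshold.metric] = "ok"
    return results


if __name__ == "__main__":
    sample = {"cpu_percent": 42.0, "ram_percent": 88.5, "disk_percent": 93.0}
    print(evaluate(sample))
```

Reporting a missing metric as `unknown` rather than `ok` is a deliberate choice here: a silent collector is itself worth an alert.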

During this phase, we also set up production environments on Kubernetes, configured Vault for keeping secrets secure, and extended our CI/CD pipelines for thorough testing.

Phase 3. The big migration

In the final phase, we brought it all together. We set up a private Docker registry with Harbor to store all the container images, then migrated all 15 microservices to the new environment.

As users transitioned to the new platform, the numbers took off:

- Client companies jumped from 150,000 to 900,000 – a sixfold increase.

- The platform welcomed 300,000 new users, growing to serve more than 2 million people monthly.

Impact

The Docker deployment solution we built gave the client exactly what they needed – code that reduced server load, kept applications running smoothly, and made updates faster. For the first time, they could deploy applications on their own servers without performance headaches.

With the automated Kubernetes deployment system using Keel, their developers could push verified code to production with confidence and roll back changes with a click if anything went sideways.
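The sketch below illustrates the general pattern of policy-gated rollouts with one-step rollback. It is loosely modeled on semantic-version update policies of the kind tools like Keel offer, but the policy names, their exact semantics, and the `Deployment` class are simplified assumptions and do not represent Keel's API.

```python
def parse_version(tag: str) -> tuple:
    """Parse 'v1.2.3' or '1.2.3' into a comparable (major, minor, patch) tuple."""
    major, minor, patch = (int(part) for part in tag.lstrip("v").split("."))
    return (major, minor, patch)


def should_update(current: str, candidate: str, policy: str) -> bool:
    """Decide whether a newer image tag may be rolled out under the policy."""
    cur, new = parse_version(current), parse_version(candidate)
    if new <= cur:
        return False  # never move to an older or identical version
    if policy == "major":
        return True
    if policy == "minor":
        return new[0] == cur[0]      # same major version only
    if policy == "patch":
        return new[:2] == cur[:2]    # same major and minor version only
    raise ValueError(f"unknown policy: {policy}")


class Deployment:
    """Tracks released tags so the previous one can be restored."""

    def __init__(self, image_tag: str):
        self.history = [image_tag]

    @property
    def current(self) -> str:
        return self.history[-1]

    def roll_out(self, candidate: str, policy: str) -> bool:
        if should_update(self.current, candidate, policy):
            self.history.append(candidate)
            return True
        return False

    def roll_back(self) -> str:
        if len(self.history) > 1:
            self.history.pop()
        return self.current


if __name__ == "__main__":
    deployment = Deployment("1.4.2")
    print(deployment.roll_out("1.4.3", policy="patch"), deployment.current)
    print(deployment.roll_back())
```

Keeping the release history next to the policy check is what makes a rollback a single step: the previous tag is already known to have passed the same gate.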

The monitoring and alerting systems became the client’s early warning system as they scaled. With dashboards showing the health of their infrastructure and instant alerts for any issues, their tech team could spot and fix problems before users noticed.

We even built a safety net – Terraform code for quickly spinning up backup infrastructure on another cloud provider if their primary one had issues. This proved its worth several times, dramatically cutting downtime during outages.
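How the decision to bring up backup infrastructure is made is not described here. One common approach is to wait for several consecutive failed health probes before failing over, so that a single blip does not trigger a costly switch. The sketch below shows that rule; the function name and the default of three failures are assumptions.

```python
def should_fail_over(probe_results, required_failures=3):
    """Return True when the most recent probes all failed.

    `probe_results` is a chronological list of booleans, True meaning the
    primary provider answered its health check.
    """
    if len(probe_results) < required_failures:
        return False  # not enough evidence yet
    recent = probe_results[-required_failures:]
    return not any(recent)


if __name__ == "__main__":
    print(should_fail_over([True, False, False, False]))
    print(should_fail_over([False, False, True, False]))
```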

The client gained control over their environment, the scalability to support massive growth, and the reliability their expanding user base demanded. Most importantly, they gained independence – the freedom to chart their own course in a competitive market without being boxed in by third-party platforms.
