Remember when computers could only follow exact instructions? Over the past few decades, artificial intelligence has grown from basic calculator-like systems into sophisticated tools that can recognize images, translate languages, and even generate creative content.

The latest advancement in this journey is the AI thinking model. Unlike older AI systems that were good at single, specific tasks, these new models attempt to mimic how humans think.

In this article, we’ll break down what these AI thinking models are and how they’re different from previous technologies. We’ll also look at their limitations and the challenges they present as they become more integrated into our daily lives.

What is an AI thinking model?

The quest to create machines that “think” dates back to the 1950s, when Alan Turing and John McCarthy first explored whether machines could simulate intelligence. Early attempts focused on creating rule-based systems that could perform logical reasoning – like the expert systems of the 1970s and 1980s that followed precise if-then rules to reach conclusions.

The breakthrough came when researchers shifted from telling computers exactly what to do to creating systems that could learn from data. By the 2010s, this approach led to neural networks that could recognize patterns in vast amounts of information. Today’s AI thinking models build on these foundations, combining pattern recognition with more structured forms of knowledge and reasoning.

An AI thinking model is a computational framework designed to process information, solve problems, and make decisions in ways that mirror aspects of human cognitive processes.

When we talk about AI “thinking,” we’re not suggesting these systems have consciousness or self-awareness. Machines don’t possess these qualities.

What AI thinking models do share with human cognition are certain functional capabilities:

- Processing and organizing information

- Recognizing patterns and making predictions

- Adapting strategies based on feedback

- Applying knowledge from one context to another

The key difference: human thinking emerges from biological processes we don’t fully understand, while AI thinking models are engineered systems following mathematical operations, however complex.

Characteristics of AI thinking models

Several features distinguish AI thinking models from simpler computational systems:

- Knowledge representation: They maintain internal representations of information that can be accessed, updated, and connected in meaningful ways.

- Reasoning capabilities: They can follow chains of logic to reach conclusions or generate explanations for their outputs.

- Adaptability: They can adjust their responses based on new information without requiring complete reprogramming.

- Context sensitivity: They consider relevant context when interpreting information, rather than processing each input in isolation.

- Generalization: They can apply concepts learned in one situation to new, previously unseen scenarios.

- Multi-step processing: They can break complex problems into manageable steps and coordinate between different types of reasoning.

- Uncertainty handling: They can operate effectively even with incomplete information, providing confidence levels rather than just binary answers.

These characteristics allow AI thinking models to tackle problems that were previously considered the exclusive domain of human intelligence.
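To make the uncertainty handling point a bit more concrete, here is a minimal sketch of how raw model scores can be turned into confidence levels with a softmax function. The labels and scores are made-up values for illustration, not output from any real model.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores a model might assign to three labels.
labels = ["spam", "promotion", "personal"]
scores = [2.0, 1.0, 0.1]

confidences = dict(zip(labels, softmax(scores)))
best = max(confidences, key=confidences.get)

# Report the top label together with its confidence instead of a bare yes/no.
print(best, round(confidences[best], 2))
```

Instead of a binary answer, the caller gets a probability for every label and can decide what to do when the top confidence is low.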

Differences from classical AI models

Classical AI attempted to program intelligence explicitly, with human experts encoding specific rules and knowledge. Modern AI thinking models, by contrast, develop their capabilities through exposure to vast amounts of data, discovering patterns and relationships that would be impossible to program manually.

This shift represents more than just a technical evolution – it’s a completely different philosophy about how machines can develop intelligent behaviors. The following table highlights the key differences between these approaches:

| Classical AI Approaches | Modern AI Thinking Models |
| --- | --- |
| Rule-based systems: Rely on explicit if-then rules created by human experts. Performance depends entirely on the quality and completeness of these manually created rules. Example: Medical diagnosis systems from the 1980s that followed decision trees created by doctors. | Statistical learning approaches: Learn patterns directly from data without requiring explicit rules. Can discover subtle relationships that human experts might miss. Example: Modern medical AI that learns to identify diseases from millions of patient records and medical images. |
| Symbolic AI: Represents knowledge using symbols and logical relationships that humans can directly interpret. Manipulates these symbols according to formal logic rules. Example: Chess programs that represent the board state with symbols and evaluate moves using logical rules. | Connectionist models: Represent knowledge as patterns of activation across networks of simple units. Knowledge is distributed throughout the network rather than stored in discrete symbols. Example: AlphaZero learning chess strategy through patterns discovered during millions of self-play games. |
| Deterministic reasoning: Given the same inputs, always produces exactly the same outputs. Follows precise, predictable logical paths. Example: A tax calculation program that always computes the same tax amount for identical financial inputs. | Probabilistic reasoning: Incorporates uncertainty into its processing, providing confidence levels rather than singular answers. Can consider multiple possible interpretations. Example: A modern AI assistant considering multiple interpretations of an ambiguous question before responding. |
| Explicit knowledge representation: Stores information in human-readable formats like rules, ontologies, or semantic networks. Knowledge is discrete and directly editable. Example: An expert system with a database of explicitly defined facts about a specific domain. | Implicit knowledge representation: Encodes knowledge in mathematical weights and biases distributed throughout neural networks. Knowledge emerges from patterns rather than being explicitly defined. Example: A language model that captures grammar rules implicitly through statistical patterns rather than explicit grammatical rules. |
| Manual engineering: Requires human experts to explicitly program every capability and rule. Systems improve only when humans update their programming. Example: A classical spam filter with manually created rules about what constitutes spam. | Autonomous learning: Systems improve automatically through exposure to data, often discovering unexpected solutions. Human input shifts to designing learning architectures rather than programming specific behaviors. Example: A modern spam filter that continuously learns new patterns of suspicious messages without explicit updates to its rules. |
| Single-task specialization: Systems are designed for specific, narrowly defined tasks and cannot transfer knowledge between different domains. Example: A specialized program that only plays checkers and cannot apply its strategic knowledge to other games. | Multi-domain capabilities: Can apply learning across different domains and tasks, demonstrating flexibility similar to human transfer learning. Example: A large language model that can write poetry, explain science concepts, and generate computer code using the same underlying knowledge. |
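The spam filter contrast from the comparison above can be sketched in a few lines. This is a toy illustration under our own assumptions: the keyword list, the training messages, and the word-count scoring are all hypothetical, and production filters use far more robust statistical methods.

```python
from collections import Counter

# Classical approach: a person writes the rules by hand.
SPAM_KEYWORDS = {"winner", "free", "prize"}  # hypothetical rule list

def rule_based_is_spam(message):
    """Flag a message if it contains any hand-picked keyword."""
    return any(word in SPAM_KEYWORDS for word in message.lower().split())

# Learning approach: word statistics are derived from labeled examples.
def train_word_counts(examples):
    """Count how often each word appears in spam and in non-spam ("ham")."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def learned_is_spam(message, counts):
    """Flag a message if its words were seen more often in spam than in ham."""
    words = message.lower().split()
    spam_score = sum(counts["spam"][w] for w in words)
    ham_score = sum(counts["ham"][w] for w in words)
    return spam_score > ham_score

training_data = [
    ("claim your free prize now", "spam"),
    ("urgent crypto offer inside", "spam"),
    ("lunch at noon tomorrow", "ham"),
    ("notes from the meeting", "ham"),
]
counts = train_word_counts(training_data)

message = "crypto offer just for you"
print(rule_based_is_spam(message))        # False: no rule mentions "crypto"
print(learned_is_spam(message, counts))   # True: pattern picked up from data
```

The rule-based filter misses the new kind of message until someone edits its keyword list, while the learned filter changes its behavior as soon as new labeled examples are added to the training data.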

How AI thinking models work

Now, let’s pull back the curtain and explore how these systems actually function.

Architectural components and design principles

Modern AI thinking models, particularly those based on the transformer architecture, are built like complex, interconnected networks that process information in layers. Think of them as a series of specialized departments in a company that each handle different aspects of a problem before passing their work to the next department:

- Input processing layer: Transforms raw data (like text or images) into a format the model can work with.

- Embedding layers: Convert inputs into rich numerical representations that capture meaning.

- Attention mechanisms: Allow the model to focus on relevant parts of the input.

- Processing blocks: Perform complex calculations and transformations on the data.

- Output layer: Converts processed information back into a useful format (like text or decisions).

The design principle that revolutionized modern AI was the shift from sequential processing (handling information one piece at a time) to parallel processing (examining entire sequences at once).

Information processing workflow

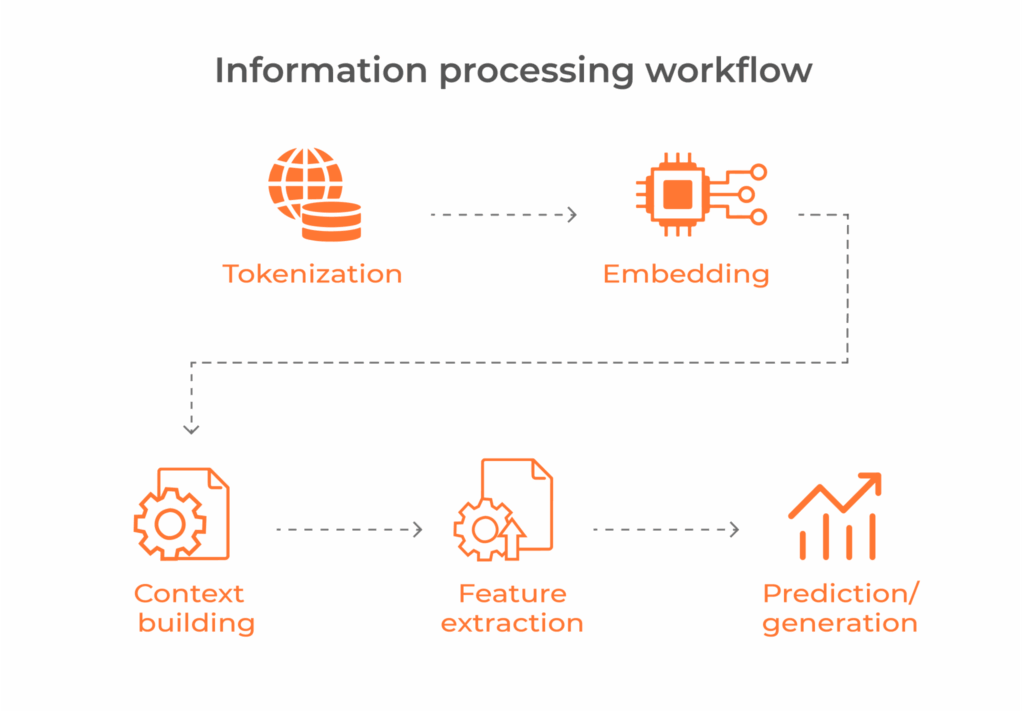

When an AI thinking model receives input, it follows this workflow:

- Tokenization: First, the model breaks down input (like text) into manageable pieces called tokens. For text, these might be words or parts of words.

- Embedding: Each token is converted into a numerical representation – a vector with hundreds of dimensions – that captures its meaning and relationships to other concepts.

- Context building: The model analyzes relationships between all pieces of input simultaneously, rather than sequentially.

- Feature extraction: The model identifies important patterns and features in the data.

- Prediction/generation: Based on the processed information, the model produces outputs like predictions, classifications, or generated content.

This workflow isn’t strictly linear – modern AI thinking models create complex webs of information processing where different components interact and influence each other.
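The first two steps of this workflow, tokenization and embedding, can be sketched roughly as follows. This is a simplified illustration: real models use subword tokenizers and learned embedding tables with hundreds of dimensions, whereas the helper names, the whitespace tokenizer, and the random 4-dimensional vectors here are assumptions made for the example.

```python
import random

def tokenize(text):
    """Split text into lowercase word tokens (real models use subword tokenizers)."""
    return text.lower().split()

def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab

def make_embedding_table(vocab_size, dim, seed=0):
    """Create one random vector per token ID; training would adjust these values."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]

tokens = tokenize("The cat sat on the mat")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

table = make_embedding_table(len(vocab), dim=4)
embeddings = [table[i] for i in token_ids]  # one vector per input token

print(token_ids)            # [0, 1, 2, 3, 0, 4]
print(len(embeddings[0]))   # 4
```

Both occurrences of "the" map to the same ID and therefore start from the same vector. It is the later context-building step that lets a model treat the same token differently depending on its surroundings.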

Training methodologies and data requirements

Models are initially trained on vast datasets – sometimes billions of examples – to learn general patterns and relationships. This phase can take weeks or months on specialized hardware.

Many modern models learn by predicting missing pieces of information. That’s called self-supervised learning. For example, language models might be trained by removing random words from sentences and learning to predict what’s missing.
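As a rough illustration of how such training examples can be produced without human labeling, the sketch below hides one word in a sentence and keeps the hidden word as the answer the model should predict. The `[MASK]` placeholder and the function name are illustrative choices, and real training pipelines mask tokens at much larger scale and with more elaborate strategies.

```python
import random

MASK = "[MASK]"  # placeholder token; the name is illustrative

def make_masked_example(sentence, rng):
    """Hide one randomly chosen word and return (masked_sentence, hidden_word)."""
    words = sentence.split()
    position = rng.randrange(len(words))
    target = words[position]
    words[position] = MASK
    return " ".join(words), target

rng = random.Random(42)  # fixed seed so the example is repeatable
sentence = "the model learns from raw text"
masked, target = make_masked_example(sentence, rng)

# The masked sentence is the input; the hidden word is the label.
print(masked, "->", target)
```

The label comes from the data itself, which is why this style of training can use raw text without manual annotation.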

After pre-training, models are specialized for particular tasks using smaller, more focused datasets.

Inference mechanisms and computational processes

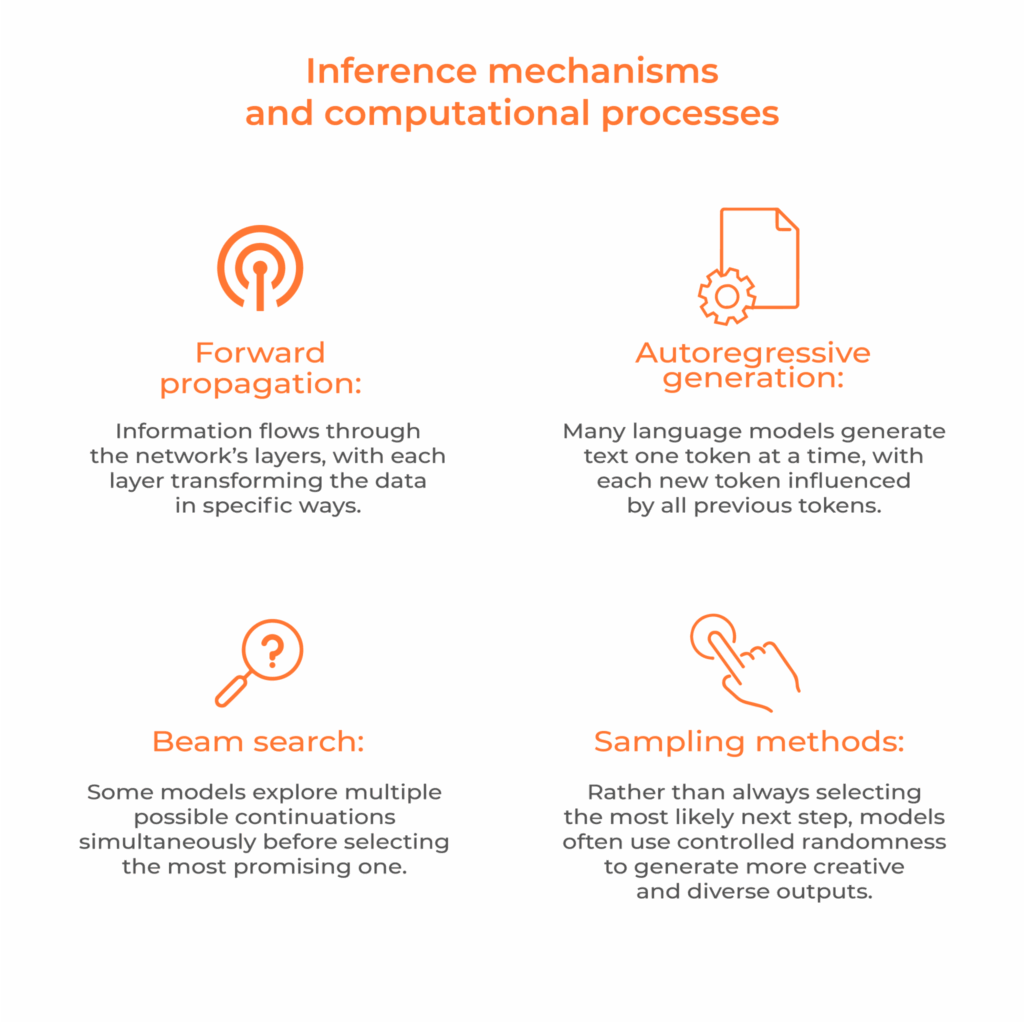

Once trained, AI thinking models use various computational approaches to generate responses or make decisions:

Attention mechanisms and context handling

One of the most important breakthroughs in modern AI thinking models is the attention mechanism. Traditional neural networks struggled to maintain context over long sequences – imagine trying to keep track of the subject of a conversation that spans many paragraphs.

Attention mechanisms solve this by allowing the model to “focus” on relevant parts of the input when making predictions or generating outputs:

- The model calculates the relevance of each input element to each other element.

- This creates a map of relationships and dependencies across the entire input.

- When generating a response, the model can “attend” to the most relevant parts of the input.

For example, when answering a question, an AI model might pay special attention to words in the input that relate directly to the question being asked, rather than treating all words as equally important.
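The steps above correspond closely to scaled dot-product attention, the form of attention used in transformer models. Below is a minimal single-head sketch in plain Python. It leaves out the learned query, key, and value projections that real models apply, and the three toy vectors are made-up inputs.

```python
import math

def softmax(values):
    """Turn scores into weights that are positive and sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, weight_rows = [], []
    for q in queries:
        # Relevance of every input element to this one.
        scores = [dot(q, k) / scale for k in keys]
        weights = softmax(scores)
        # Weighted mix of the value vectors, dimension by dimension.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        weight_rows.append(weights)
    return outputs, weight_rows

# Three toy token vectors; a real model would derive these from embeddings.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)

for row in weights:
    print([round(w, 2) for w in row])
```

Each row of `weights` is the "map of relationships" for one token: it shows how strongly that token attends to every token in the input, including itself.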

Working memory implementation

Unlike humans, AI thinking models don’t have a separate short-term memory system. Instead, they implement a form of “working memory” through:

- State preservation: Maintaining information about the current conversation or task.

- Context windows: Limiting how much previous information the model can access at once (often measured in tokens).

- Activation patterns: Distributed patterns of activity across the network that represent current information.
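To see what a context window means in practice, here is a small sketch of one common way applications cope with it: keeping only the most recent messages that fit within a token budget. The word-count tokenizer and the `fit_to_context` helper are simplifying assumptions for illustration, not how any particular model or product counts tokens.

```python
def count_tokens(text):
    """Rough stand-in for a tokenizer: count whitespace-separated words."""
    return len(text.split())

def fit_to_context(messages, max_tokens):
    """Keep the most recent messages whose combined token count fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older falls outside the window
        kept.append(message)
        used += cost
    return list(reversed(kept))

conversation = [
    "My name is Dana and I live in Oslo",   # 9 tokens
    "What is the weather like today",       # 6 tokens
    "Please suggest an indoor activity",    # 5 tokens
]

window = fit_to_context(conversation, max_tokens=12)
print(window)  # the first message no longer fits
```

With a budget of 12 tokens, the oldest message is dropped, so the model would no longer "know" the user's name or city when producing its next reply.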

Limitations and challenges

While AI thinking models have made remarkable progress, they still face significant limitations that highlight the difference between artificial and human intelligence.

The “black box” problem

Modern AI thinking models, particularly deep learning systems, often operate as “black boxes” – we can see their inputs and outputs, but the internal reasoning process remains non-transparent.

❓ Why it matters: In critical applications like healthcare, finance, or legal decisions, we need to understand why an AI reached a specific conclusion. When a doctor recommends treatment, they can explain their reasoning; when many AI systems make recommendations, they cannot.

💡 Current approaches: Researchers are developing “explainable AI” techniques that aim to make these processes more transparent, but there’s a fundamental tension between the complexity that gives these models their power and our ability to understand their reasoning.

The hallucination problem

AI thinking models – especially large language models – sometimes generate information that sounds plausible but is factually incorrect or entirely fabricated. This phenomenon, known as “hallucination,” occurs because these models are fundamentally pattern-matching systems trained to produce plausible outputs rather than factually verified ones.

❓ Why it matters: When AI systems present incorrect information with the same confidence as accurate information, users can be misled, sometimes with serious consequences.

💡 Current approaches: Models are being fine-tuned to be more cautious in their assertions, and retrieval-augmented systems that check facts against trusted sources are being developed.

Data quality and bias

AI thinking models learn from the data they’re trained on – including any biases, errors, or gaps in that data. This leads to systems that may:

- Perpetuate historical biases

- Perform poorly for underrepresented groups

- Make confident predictions in domains where training data was limited or flawed

❓ Why it matters: As these systems are deployed in sensitive contexts like hiring, lending, or healthcare, biased outputs can cause real harm to affected individuals and groups.

💡 Current approaches: Researchers are developing techniques to audit models for bias, creating more representative datasets, and designing training methods that prioritize fairness.
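One simple form of auditing is to compare a model's accuracy across groups. The sketch below does this for a handful of made-up evaluation records. The group names, predictions, and labels are hypothetical, and real audits use larger samples and several fairness metrics rather than accuracy alone.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical evaluation records: (group, model prediction, true outcome).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

rates = accuracy_by_group(records)
gap = max(rates.values()) - min(rates.values())

print(rates)  # {'group_a': 1.0, 'group_b': 0.5}
print(gap)    # 0.5
```

A large gap like this one does not explain why the model performs worse for one group, but it flags where to look, for example at how well that group is represented in the training data.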

Context limitations

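Current AI thinking models can process only a limited amount of information at once. This limit is measured in tokens and varies widely by model: earlier text models handled only a few thousand words, while newer ones accept far more. This “context window” limitation means they: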

- Can “forget” information from earlier in a conversation

- Struggle with tasks requiring integration of information across very long contexts

- Cannot maintain an understanding of complex situations over extended interactions

❓ Why it matters: Many real-world problems require considering large amounts of information simultaneously or remembering important details over long periods.

💡 Current approaches: Newer models feature expanded context windows, and researchers are developing architectures specifically designed to handle longer-term information.

Ethical and societal challenges

Beyond technical limitations, AI thinking models raise profound ethical questions:

- Privacy concerns: Models trained on vast datasets may inadvertently memorize sensitive personal information.

- Misuse potential: Advanced language models can be used to generate misinformation or malicious content.

- Job displacement: As these systems become more capable, they may automate tasks currently performed by humans.

- Consent issues: People whose data was used for training rarely provided explicit consent for this use.

❓ Why it matters: Technology development doesn’t happen in a vacuum – these systems are being integrated into society now, often before we’ve developed appropriate governance frameworks.

💡 Current approaches: Multidisciplinary teams of ethicists, policymakers, and technical experts are working to develop responsible AI guidelines, though much work remains to be done.

Conclusion

AI thinking models represent a remarkable leap in our ability to create systems that process information in increasingly human-like ways. They’re already transforming industries and expanding what’s possible with technology.

In the next part, we’ll discuss some real-world applications of AI thinking models and compare them against each other.

Ready to explore how AI thinking models can drive results for your business? Schedule a 30-minute consultation with Setronica! We’ll assess your specific business challenges, identify high-impact opportunities, and create a customized roadmap for integrating these technologies into your operations.
