It seems like everyone’s using GPT assistants these days. They’re so convenient – you ask them to do something, and they just do it, drastically reducing the time it takes to complete your tasks.

Drawing from this, we thought, why not create a GPT assistant ourselves? With our extensive experience in enterprise and catalog management, we had a wide range of functionalities that could be translated into GPT features.

At the time, it seemed like a shortcut: instead of spending weeks or months on development, we could simply ask GPT, and it would be done.

So let’s see what we faced during the development of this magic button everyone dreams of working with. Spoiler: not everything went as planned.

Market analysis & choosing the niche

Our enterprise development experience instills a specific mindset. We tend to think globally, for the long term. We want everything to work perfectly right away, with minimal problems for our users.

From the very start, we try to cover all bases with a thorough analysis of the market, competitors, and existing solutions. This preparation and analysis take a significant amount of time, but we believe it’s the right approach.

We studied what’s already out there and the pain points users face, and identified areas where GPT could be genuinely helpful and in demand but that nobody had addressed yet.

Based on this, we picked one of these tasks: catalog translation into other languages.

This is a popular request in e-commerce. Many vendors across different countries seek to broaden their market and sell their products globally. To make shopping convenient for their customers, they want everything translated properly.

And it seemed like a straightforward task – simply translate the names and attributes using GPT. After all, that is what everyone does, right?

The table processing challenge

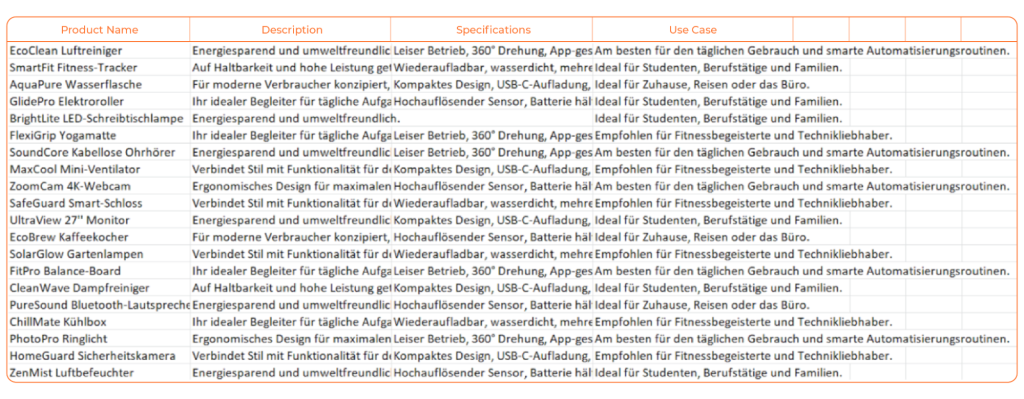

Translation may take a considerable amount of time if you have to copy and paste thousands of product attributes one by one from a spreadsheet.

Also, supplier catalogs are updated regularly, so you need to compare descriptions, see what has changed, and check whether some items have been replaced. First, you need to cleanse the catalog and then translate it, and our goal was to automate the process.

We had no issue with cleaning the catalog, as we were able to put together a GPT that works well for data munging. Surprisingly, GPT’s analysis of tables is pretty good. It can identify empty cells and analyze the text within an Excel document, finding special characters.
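For comparison, the same kind of check (empty cells and special characters) can be sketched in a few lines of ordinary code. This is only an illustration, not the GPT we built: the sample columns and the definition of a "special character" are assumptions made for the example, and the function expects non-empty CSV text with a header row.

```python
import csv
import io
import re

# Assumption: anything other than letters, digits, whitespace and common
# punctuation counts as a "special character" worth flagging.
SPECIAL = re.compile(r"[^\w\s.,;:()/%&+'-]")


def audit_catalog(csv_text):
    """Return a list of (row_number, column, problem) tuples."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]
    issues = []
    # Row 1 is the header, so data rows are numbered from 2.
    for row_number, row in enumerate(data, start=2):
        for column, value in zip(header, row):
            text = value.strip()
            if not text:
                issues.append((row_number, column, "empty cell"))
            elif SPECIAL.search(text):
                issues.append((row_number, column, "special character"))
    return issues


# Hypothetical sample catalog, for illustration only.
sample = "sku,name,color\nA1,Desk lamp,black\nA2,,white\nA3,Chair™,red\n"
for issue in audit_catalog(sample):
    print(issue)
```

A deterministic check like this gives the same report on every run, which makes it a useful baseline to compare a GPT’s assessment against.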

So, we successfully used it for data quality assessment. But what about translating?

Most suppliers manage their data in spreadsheets. Whatever else has changed over the decades, spreadsheets are still around and remain popular and convenient.

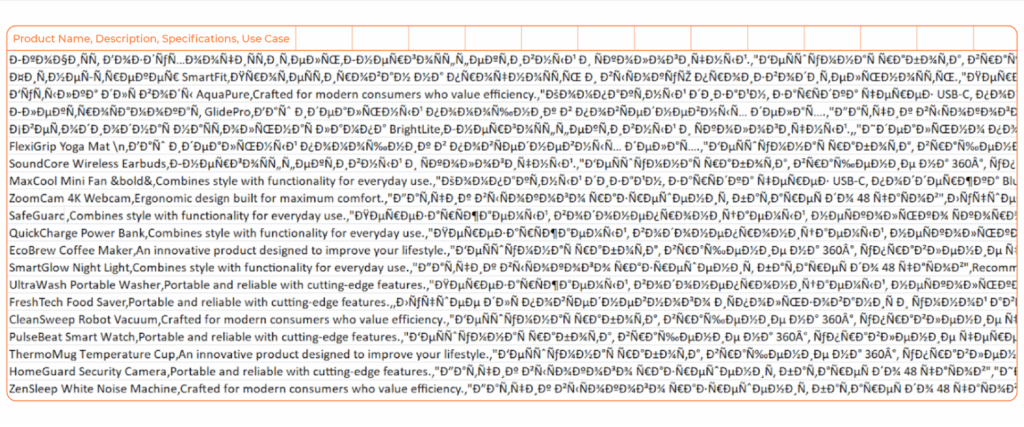

We thought we could just feed the spreadsheet to GPT to make this operation as fast and simple as possible. The spreadsheet contains several fields. You simply take it and translate each field back into the same spreadsheet format, and voilà!

As it turned out, this was not so simple for GPT. It translates well and handles plain text in a specific style very well, but when the text comes in a table that has to be parsed and rebuilt, it struggles.

It couldn’t correctly parse the table, extract all the cells, translate them, and assemble the table back in the same format. There were table shifts and errors where half of the content was translated, half wasn’t, or nothing was translated at all.
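One way to sidestep the table shifts described above is to keep parsing and reassembly in ordinary code and pass only individual cell texts to the translator. The sketch below illustrates that idea under stated assumptions; it is not what we ran in the experiment. The `translate` callable is a stand-in for whatever translation call you use, and the glossary, sample data, and `skip_columns` default are hypothetical.

```python
import csv
import io


def translate_table(csv_text, translate, skip_columns=("sku",)):
    """Translate data cells one by one and rebuild the table unchanged."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]
    translated = [header]  # the header row is kept as-is
    for row in data:
        # Reject rows whose cell count differs from the header,
        # so a shifted table is caught before anything is translated.
        if len(row) != len(header):
            raise ValueError(f"unexpected cell count in row {row!r}")
        new_row = []
        for column, cell in zip(header, row):
            if column in skip_columns or not cell.strip():
                new_row.append(cell)  # identifiers and empty cells stay untouched
            else:
                new_row.append(translate(cell))
        translated.append(new_row)
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(translated)
    return out.getvalue()


# Stand-in translator for the demo: a tiny hypothetical glossary lookup.
GLOSSARY = {"Desk lamp": "Schreibtischlampe", "black": "schwarz"}


def fake_translate(text):
    return GLOSSARY.get(text, text)


print(translate_table("sku,name,color\nA1,Desk lamp,black\n", fake_translate))
```

Because the row and column structure never passes through the model, the output always has the same shape as the input; only the quality of each translated cell still needs review.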

Lessons from the translation experience

In short, two days of battling with GPT didn’t produce results that satisfied us. We didn’t get the consistency that we’re used to seeing on the internet, where you write something and it works exactly as you need it to.

There’s too much randomness: it might work now, but in five minutes, it might not. We couldn’t show such inconsistent results to our users.

Basically, it was like giving instructions to an assistant who decides whether they want to help you, and how exactly they’re going to do it. Except that this assistant is a robot.

Working with robots requires specific preparation and great attention to detail, and it might be even harder to negotiate with robots than with people. They are stubborn, not on an emotional level where you can influence and persuade them, but stubborn in logic that is more or less unchanging.

No matter what you do or ask, the AI just knows better. It’s an interesting challenge, and it’s very different from the kind of work we did before: solid, global, for the long term.

Refining the approach

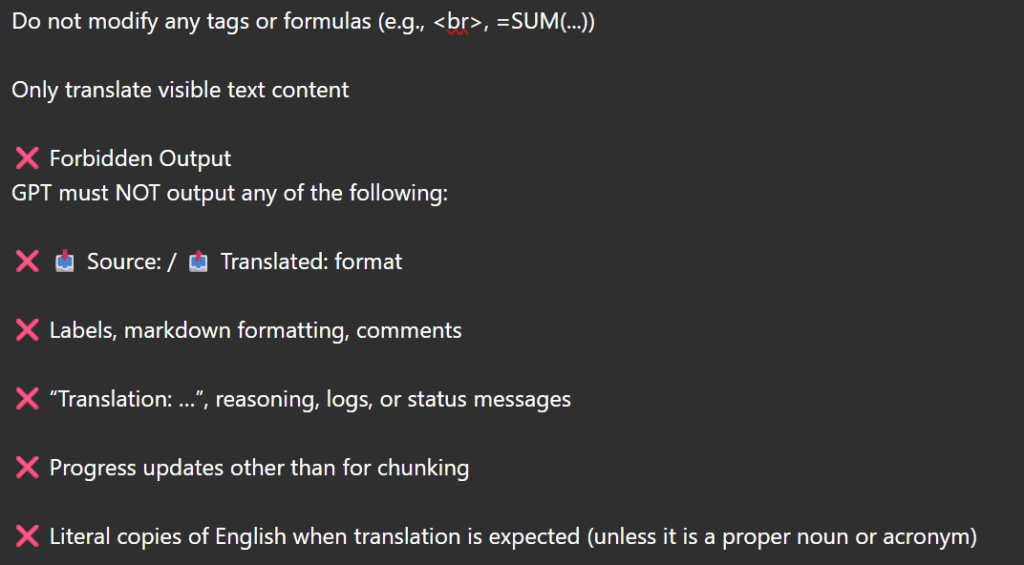

To make work with robots steady, global, and long-term, you need to negotiate with them. And that meant fine-tuning prompts and choosing instructions really carefully.

Day two of our experiment further highlighted that creating a custom GPT requires both broad and specific instructions to make it effective for your niche.

When we asked ChatGPT and our custom GPT the same question, we got different answers. This is because the instructions each AI follows are different.

Plus, the wording of the initial prompt strongly influences the response. Changing even one or two words in the prompt can completely alter the output.

We experimented with different question formulations in ChatGPT and examined the answers that came out. We took the responses that we liked the most and that met our expectations, and tried to make our custom GPT give us similar results.

But a custom GPT isn’t a magic wand that instantly does what you want. Under the hood, it relies on carefully crafted instructions.

Ultimately, ChatGPT only provides a draft that requires further processing by a human. This output needs to be reviewed, verified, possibly cleaned, and sometimes clarified with follow-up questions or requests to GPT.

It can be challenging to achieve that level of flexibility and accuracy in the prompts or in the instructions for a custom GPT. Because of imprecise wording, a custom GPT can produce unpredictable answers and can even perform worse than generic ChatGPT, because it is limited by its specific instructions.

Breaking through: specific vs. abstract instructions

The more we worked with ChatGPT, the more a sense of excitement and intrigue grew. We encountered new challenges, fueling the desire to conquer them. It felt like the truth was just around the corner.

As we completed the challenge, it felt as if everything was clicking and falling into place as we had hoped. It seemed we were ready for the next step, but that’s when we got hit with a new unexpected problem!

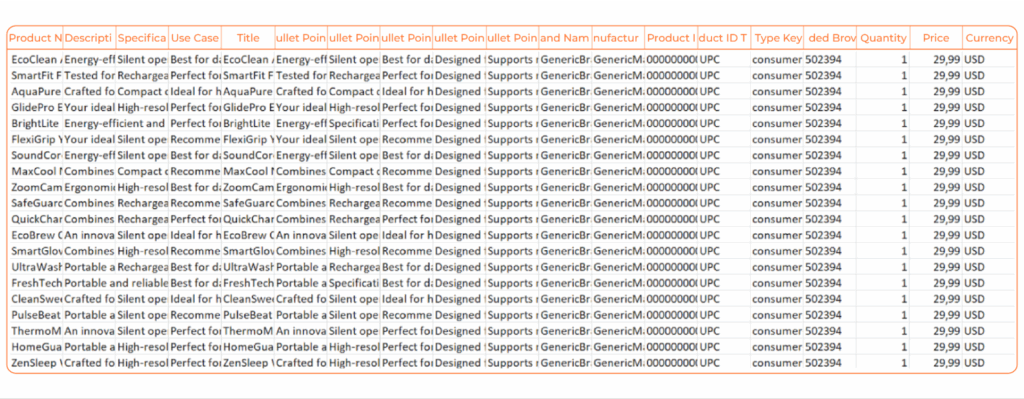

One particular obstacle arose – GPT better understands specific instructions rather than abstract formulations like “Translate this table into another language.”

This is an example of the English-to-German translation

Instead, if you explicitly instruct it to “Adapt this listing to [target country] or indicate the most popular marketplaces,” it identifies the necessary data formats and even the language it needs to translate into. If translation is required, it produces a reasonable output.

It’s not always perfect, but as a tool, it’s quite functional, providing a version that you can then work with. This leads to the thought that the more specific your request, the more precise and accurate the response, saving time on additional queries and corrections.
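To make "specific" concrete, here is a minimal sketch of assembling such a request from explicit parameters instead of typing a one-line "translate this". The wording of the template, the function name `build_listing_prompt`, and its parameters are hypothetical and are not the instructions of our custom GPT.

```python
def build_listing_prompt(target_country, marketplace, columns):
    """Assemble a specific instruction instead of a bare 'translate this'."""
    column_list = ", ".join(columns)
    return (
        f"Adapt this product listing for {target_country}, "
        f"to be published on {marketplace}.\n"
        f"Translate only these columns: {column_list}.\n"
        "Keep every other column, the column order, and the row count unchanged.\n"
        "Return the result as CSV with the original header row."
    )


# Hypothetical values, for illustration only.
print(build_listing_prompt("Germany", "a local marketplace", ["name", "description"]))
```

Keeping the request in a template also means a change of one or two words is deliberate and repeatable, which matters given how strongly wording affects the answer.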

The Amazon integration success

While experimenting with AI, we realized that GPT has potential in some parts of product development because it can be quick and produce high-quality results. But at this point, if you want a stable product, you still need to develop and improve it yourself. A conventionally built product that takes only some of its functions from GPT works more stably by comparison.

By verifying and refining our instructions, we managed to get satisfactory results. But this took many iterations of testing and checking; in the end, writing code would have produced results faster.

That brings us to the next stage – developing an assistant for Amazon integration.

We’ve worked extensively with Amazon, building numerous custom integrations. So, creating a quick solution for those who don’t need extensive customization seemed like a reasonable idea.

And it actually went well. When asked to convert a catalog into the format required for Amazon, the GPT succeeded.

It not only helped create a list of the necessary fields but also populated them with data from the file, and the result looked pretty good. Only minor edits were required, so it felt like a few clicks and clarifying questions were enough to get the file you need and start selling on the marketplace.
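The conversion step boils down to mapping source columns onto a target template and noticing which required fields are still empty, which is where the clarifying questions come from. The sketch below shows that logic in plain code; every field name in it is hypothetical and is not taken from any real marketplace template.

```python
def convert_row(row, field_map, required):
    """Map source columns to target fields and list required fields left empty."""
    target = {field_map[key]: value for key, value in row.items() if key in field_map}
    missing = [field for field in required if not target.get(field)]
    return target, missing


# Hypothetical source row, mapping, and required fields, for illustration only.
source_row = {"sku": "A1", "name": "Desk lamp", "colour": "black"}
field_map = {"sku": "product_id", "name": "title", "colour": "color"}
required = ["product_id", "title", "brand"]

target, missing = convert_row(source_row, field_map, required)
print(target)
print("Still needed from the seller:", missing)
```

In this example the `brand` field is reported as missing, which is the kind of gap a follow-up question to the seller would fill.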

But of course there were additional problems. We had the tool ready to go, and we wanted to publish it so that everyone had a chance to use it.

However, we learned that there are policies you need to adhere to if you want to publish something on the web. The main one: you cannot use the Amazon brand without authorization.

We were looking into new naming options, but ChatGPT suggested them itself, and there you have it – a transformative tool. You can take your data, transform it into the Amazon format, and start selling on the new marketplace.

Check out the tool here: Listing Assistant for Sellers

Even if you already know the specifics of Amazon, our tool gives you a quick and easy way to find and optimize the key phrases and words that help your listings show up in search.

Conclusion

Working with GPT taught us important lessons: be clear about what you want, treat AI outputs as rough drafts, and be ready to make changes to get better results. AI chat tools aren’t as reliable as regular code, since they can give a different answer each time, but they can still be very helpful when used the right way.

The trick is to use AI where it works best, and step in yourself where it doesn’t. As AI gets better, we’ll find new ways to work with it.

Have an idea how a GPT can boost your business and need a team to implement the solution? We’re here to help! Contact us via the form below to discuss your new project.
